When I started U.S. Air Force pilot training in 1979, it wasn’t uncommon for the service to lose an airplane every month. On average we lost five Cessna T-37s, our primary trainer, and seven Northrop T-38s, our advanced trainer, annually. Even the operational Air Force was accustomed to these kinds of losses. The primary fighter that year was the F-4 Phantom II and it averaged two losses a year. And the heavy aircraft world was not immune. We lost a KC-135A tanker and C-141 cargo transport every two years. As we used to say back then, “You have to expect a few losses in a big operation.”

The most common refrain in class for us was, “You have to know this cold, or you will become a smoking hole in the desert.” We were at the former Williams AFB, near Phoenix, and most of our flying was over the desert. To get an idea of the mindset of the service back then, a good case study would be the crash of a North American XB-70 Valkyrie on June 8, 1966.

The Valkyrie was designed to meet the requirements of a 1955 proposal for a bomber that would be fielded in 1963. The Soviets had just become nuclear capable, and we wanted a long-range bomber to hit them in their territory before they had a chance to hit us. The biggest threat to our bombers at the time was their interceptors, so this airplane was supposed to fly very high, very fast. Nuclear bombs at the time were very large, so the airplane had to have a large payload. The XB-70 won the contract, with a promised top speed of Mach 3+ and altitude of 70,000 ft.

The airplane was both ahead of and behind the times. It took six engines using hybrid fuels to give it the required speed. While the engines burned twice as much gas as a conventional bomber, it flew at four times the speed. Its fuselage was designed to funnel the supersonic shock wave under the wings to provide compression lift, further improving its speed and fuel numbers. But by the time it started test flights, the mission had already changed. In 1960, the Soviets shot down a U-2 spy plane at around 70,000 ft., demonstrating the ability to use missiles to down aircraft at very high altitudes. The Air Force changed tactics to fly very low, beneath radar coverage, to penetrate enemy airspace. But once a weapon system’s procurement has begun, the Defense Department is rarely willing to cancel.

But Secretary of Defense Robert McNamara, over the objections of the Air Force, was able to do just that, killing the program in 1962. Two XB-70s had been built and were relegated to conducting advanced studies of aerodynamics and propulsion. On June 8, 1966, someone at General Electric thought it would be a great idea to have photos of the XB-70 flying formation with an F-4 Phantom, F-5, T-38 and an F-104 Starfighter. All five were powered by GE engines, after all. But then once the photo shoot was completed, the F-104 drifted too close to the XB-70 and was pulled in and over it, severing the XB-70’s tail in the process. Both the F-104 and XB-70 crashed, killing the fighter pilot and the bomber’s copilot. The XB-70 pilot was able to eject.

The photo of the XB-70’s “smoking hole in the desert” haunts many of us veterans from that era. How is it we can lose sight of the mission and later of our safety procedures? It seems the mission morphs, the new version preempts the original, and then the new mission blinds us to our safety procedures. It is a problem that confronts every flying organization.

I think we can take the lessons from that smoking hole to look for signals from a flight operation in danger of similarly permitting disaster. Retired space shuttle commander Jim Wetherbee wrote about this in his excellent book, Controlling Risk in a Dangerous World. He writes that “every potential accident gives signals before it becomes an accident.” He has a list of five common technical, systems and managerial conditions that existed in various organizations before they experienced major or minor accidents:

(1) Emphasized organizational results rather than the quality of individual activities.

(2) Stopped searching for vulnerabilities — didn’t think a disaster would occur.

(3) Didn’t create or use an effective assurance process.

(4) Allowed violations of rules, policies and procedures.

(5) Some leaders and operators were not sufficiently competent.

Organizational Results Over Individual Actions

U.S. Navy Capt. (ret.) Wetherbee says that individuals within an organization don’t create results; they conduct activities. Results are important, of course, but it is the quality of activities that creates the quality of results. Most of us have examples in which organizations to which we belonged became so goal oriented as to become unsafe. Here is one of my earliest examples.

On Sept. 4, 1980, I flew a KC-135A tanker from Honolulu to Andersen AFB, Guam. It was a flight of 3,294 nm and took 8.4 hr. I was looking forward to meeting a friend of mine stationed at Grand Forks AFB, North Dakota, who was due to fly in the same day. He was flying a B-52 and I thought there might be a chance my airplane would refuel his. But fate had another scenario in mind.

In our tanker, Day One of the trip was to be from Loring AFB, Maine, to March AFB, California. After a night’s rest, we flew on to Honolulu and got another night off. The third day of the trip was to Guam. I knew my friend, “2nd Lt. X,” was scheduled to arrive in Guam about the same time. I didn’t know he was doing the trip nonstop. It was 5,852 nm and would take around 20 hr. with three air refuelings and a practice low-level bomb run along the way. I also didn’t know that the day before he left, President Jimmy Carter made a statement to the press that the B-52 could reach any target in the world in 24 hr. The Air Force higher-ups decided they would prove that with this trip to Guam.

The B-52 had a crew of six back then: two pilots, two navigators, an electronic warfare officer and a gunner. X’s base decided they should have an extra pilot and navigator for the 24-hr. mission. The morning of the mission one of the pilots called in sick. The base decided they could go with just two pilots. Of course!

We arrived at Andersen AFB on schedule; it was a beautiful but humid day. The rest of my crew promptly went to bed while I checked out the command post to find out about X’s bomber. I was told it was a little late but en route and would be landing the next morning. The next day I heard the bomber had landed, but the crew was restricted to quarters, pending an investigation and possible punitive actions. For the next week there was no news at all. My crew was sent to Diego Garcia and I forgot about it for a while.

When I came back to Guam, I was surprised to see a note on my door from X, inviting me to dinner at the Officers’ Club. That night he let me know what had happened:

“We checked in with command post about 2 hr. out and gave them our ETA, which was to be right at 21 hr. They asked if we had enough gas to fly three more hours and we made the mistake of telling them yes. They told us to find a holding pattern and that under no circumstances were we to land with less than 24 hr. of flight time.”

He further explained that they decided to pull the throttles back, fly their maximum loitering speed, and let the autopilot handle the flying chores. “I guess we all fell asleep, all seven of us,” he said. “The base scrambled two fighters and they found us a couple of hundred miles south of Guam, headed for the South Pole. None of us heard them on the radio. The command post told the fighters to get in front of us so their jet exhaust would shake our airplane, and it did. That’s what woke us up.”

By the time they got back on the ground they had their 24-hr. sortie and the base was contemplating throwing the book at the crew for falling asleep while flying, which was not allowed. In the end, saner heads prevailed, and the Strategic Air Command decided to look the other way.

This kind of mission myopia isn’t limited to the military. We see examples of it on a regular basis: the crash of a prototype, for example. From the original G159 through the wildly successful G550, Gulfstream had long defined business travel on the high end. The Gulfstream G650 was something that pushed the envelope further with its wider cabin, longer range and higher speeds. But the company promised takeoff and landing field lengths more akin to those of its smaller aircraft. The test pilots were tasked with validating those numbers, not determining what the numbers would be.

Of course, they had computer models to go on and were confident the promised results could be achieved. This was a mistake. A crew of four gave their lives trying to achieve the promised results on April 2, 2011. Subsequently, Gulfstream raised the numbers and the airplane has gone on to be its most successful type ever. I believe the process has been fixed — at least I hope it has. The emphasis is now on the process (activity) of testing the aircraft, and not on achieving goals (results).

While results are a good way to measure success, they can blind everyone into overlooking the quality of the process leading to those results. Just because the results were good doesn’t mean the process was optimal, safe, or advisable. That is especially true when the cost of the desired results is too high.

Not Searching for Flaws

Wetherbee found in his research of accidents that managers usually thought their teams were performing well before the disaster occurred. Those of us who have played a quality assurance role in our organizations owe upper management an accurate picture of that quality. There is pressure to say things are great, of course. But quite often we want the news to be good and so it is. (Until it isn’t.)

In 2002, I fell for one of the oldest tricks in the book used against flight examiners. It goes like this: Please pass this pair of Pilot in Command (PIC) upgrade candidates. We know both have weaknesses, but we will only pair each with the strongest copilots to obtain the little seasoning needed. I did this once in the Air Force and regretted it — and did it again while flying the Challenger 604 and that didn’t work out any better.

My Challenger flight department was collapsing upon itself after our company agreed to a buyout. We had racked up several years of high-tempo operations flying all over the world without so much as a scratch on any of our airplanes or people. But once it became known the flight department would be disbanded, we started losing experienced pilots and started hiring anyone with a pulse. One such pulsing pilot I’ll call Peter.

Peter was a good guy and a fair stick and rudder pilot but a lousy decision maker. I gave him his pass to qualify as SIC while every other pilot then in our group was of the caliber to help with Peter’s seasoning. However, a year later, after losing four experienced pilots and hiring four new ones, there was a push to make Peter a PIC. I initially resisted, but when you run out of bodies, what are you going to do? He would be a domestic-only PIC; what could go wrong?

In July of that year Peter and a contract pilot flew from Houston to Bedford, Massachusetts, landing around 9 p.m. My crew took over the airplane for the rest of the trip to Athens, Greece. The ramp was exceptionally dark and the only thing unusual about the crew swap was that their flight attendant, let’s call her Patricia, had to be helped off the airplane. I asked Peter what happened to her and he said it was “nothing to worry about.” When we landed in Ireland for fuel it was still dark. After the passengers awakened from their inflight naps they asked about Patricia’s condition. They told us that it had been so turbulent descending into Boston that Patricia had been thrown about the cabin like a ping pong ball. Once we landed in Athens we saw that the nose of the aircraft showed evidence of hail damage.

Of course, Peter denied flying through a thunderstorm. But then I caught up with the contract pilot, who admitted that they had done just that, but he was a contract pilot and “what was I supposed to do?” Nobody looks good in any of this, me included. I should have shown more character and refused to upgrade Peter when I did. Our flight department pushed for upgrades and I didn’t push back hard enough. This kind of push and failed pushback can have catastrophic results.

On Feb. 1, 2003, the space shuttle Columbia broke apart during atmospheric reentry, killing all seven crewmembers. A piece of foam insulation had separated from the external fuel tank during launch and struck the spacecraft’s left wing. The damage was enough to breach the integrity of the heat tiles and hot atmospheric gases entered the wing during reentry. The damage destroyed the internal wing structure, causing the spacecraft to become unstable and fail catastrophically.

The accident was more tragic than the sequence of events alone suggests because this kind of damage had been noticed several times before, causing anywhere from minor to near-disastrous results. The accident investigation focused on the foam and the organizational culture at NASA that caused its members to ignore the warning signs. But the culture at NASA goes deeper still since the three major accidents in its spaceflight history involve repetition.

The time leading up to the Jan. 27, 1967, Apollo 1 test fire was one of urgency to meet President John F. Kennedy’s deadline to place a man on the moon before the decade was out. The Mercury and Gemini programs had gone very well and they were ahead of the timeline. NASA believed shortcuts in the capsule’s cabin environment (100% oxygen) and materials (non-flammability not required) were justified in that the mission was a national priority and it had taken adequate precautions. Nothing could go wrong.

The Jan. 28, 1986, launch of space shuttle Challenger was to begin the 25th orbital flight. NASA’s stated objective for the mission was to make shuttle flights operational and routine. It had gradually lowered the lowest acceptable ambient air temperature for launch, overriding objections of engineers responsible for O-rings used to join segments of the solid rocket boosters. On this particular launch, the O-rings became brittle and failed. The shuttle exploded 73 sec. after launch. Nothing could go wrong.

In all three accidents there were engineers and managers who knew something was wrong, but there were higher level managers who refused to believe it.

There is a common thread between my upgrade of Peter and an injured flight attendant, the hail damage and NASA’s Apollo and space shuttle accidents. In all, the organizations got comfortable and stopped thinking about what could go wrong. If you spot an organization with this level of complacency, watch out.

No Effective Assurance Process

Wetherbee also noted that prior to accidents, many of the managers involved did not understand how to create an effective process of assurance, nor did they understand its value. In an operational organization, providing assurance means a person is giving confidence about future performance to another person, or group, based on observations or assessments of past and current activities. That can’t happen unless management is willing to listen to the operators as well as employ methods to ensure those same people are living up to the standards they have set.

Back in the 1980s, I was a member of an Air Force Boeing 707 (EC-135J) squadron in Hawaii whose mission was to support the U.S. Navy and its submarine fleet in the Pacific. In 1984, while I was at a three-month-long flight safety officer school, the Navy brought its submarines back from their former “westpac” orbits off the coasts of Korea and the USSR to “eastpac” missions right off the coast of California. As a result, our mission changed from Korea, Japan and the Philippines to California. The squadron set up a staging operation at March AFB, Riverside, California. It all seemed pretty straightforward.

Well, it would have been except the squadron had a change in leadership about a year prior and the new squadron commander set about replacing every subordinate officer who wasn’t spring-loaded to a “yes, sir” response. Non-sycophants were shown the door. The commander kept the new mission to himself along with a chosen few until it became operational. Details were restricted on a “need to know” basis, so line pilots were denied a look at the mission until they actually flew it. Two months after returning from safety school and four months after the change in mission, I found myself at March in an airplane too heavy to safely take off if an engine failed at V1.

“What do you mean you can’t go?” the commander asked over the phone. “My staff has gone through this backward and forward. You either fly it or you can consider your flying days with my squadron over.”

As it turned out, the new staff didn’t have a lot of experience considering obstacle performance with an engine failed and did not factor the mountains just north of the airport along with higher temperatures. The previous aircraft commanders made sure they had the performance for their particular departure days and didn’t mention the plan was flawed, since the emperor didn’t like bad news. I had the first departure on a hot day since the plan had changed. But the squadron commander had signed off on the plan for year-round operations. Now he had to go back to the Navy and say he couldn’t do it. He was obviously furious.

But he was lucky since all he had to contend with was a little embarrassment and not notifying the next of kin that one of his crews had splattered themselves in the California mountains. Not everyone gets this lucky.

During the night of May 31, 2014, the pilots of Gulfstream IV N121JM failed to rotate and ended up in a fireball at the end of Runway 11 at Hanscom Field, Bedford, Massachusetts (KBED). Tower reported that the nose failed to lift off and the braking didn’t start until very late in the takeoff roll. As most of us with GIV experience suspected, the pilots forgot to release their gust lock prior to engine start and then tried to disengage it during the takeoff roll rather than abort and have to admit their mistake.

The NTSB described the actions of the two pilots as “habitual intentional noncompliance.” Many of us speculated that they trained with “Brand X,” but that wasn’t true. They trained with the same training provider that we use. We also hypothesized that these pilots never heard of a safety management system (SMS). Again, not true. They had been awarded their Stage II SMS rating. If only they had a flight operations quality assurance (FOQA) system. But they did.

When the details of the crash finally emerged, it came to light that these pilots put on an act when training, just to pass the check ride. But in daily operations they flew by their own rules, not using checklists, callouts or common sense. They had SMS certification, but it was a “pay your fee, get your certificate” operation. As for FOQA, it appears the data existed, but the program was not used. These were two pilots who were comfortable operating their very expensive jet as you would a beat-up pickup truck. And now they are dead. It is a tragedy compounded since they took innocent lives with them.

Many small organizations, like my squadron in Hawaii, are parts of a larger whole that has existing, and mandatory, robust safety assurance systems. Others, like N121JM’s flight department, are enrolled in assurance systems that are simply purchased. While most SMS auditors do a good job and try to get it right, some are out there to sell you the certificates and are unwilling to criticize the people signing their paychecks. Any organization willing to pencil-whip these assurance programs is courting the next accident.

Flouting Rules, SOPs

According to Wetherbee’s study, accident investigators usually determine that some organizational rules, policies and procedures were violated before an accident. Often, the workforce reported unofficially that some managers were cognizant of these violations. I’ve noticed that the more senior and “special” an organization regards itself, the more likely this kind of negligence is to happen.

When I showed up as a copilot in our Hawaii Boeing 707 squadron, the biggest challenge for me was going to be learning how to air refuel as a receiver. Unlike the tanker that normally flew as a stable platform with the autopilot engaged, the receiver had to fly formation using old-fashioned stick and rudder skills. Just as I was getting the hang of it, one of the pilots talked the tanker into allowing him to fly fingertip formation, something reserved for smaller aircraft.

Air refueling formation is what is called “trail formation,” in that one airplane flies behind the other, albeit close enough to make physical contact. It requires a high level of training (and skill) but offers the advantage of an easily effected abort: The receiver pulls power; the tanker adds. There is more to it than that, but you get the idea.

However, fingertip formation introduces a lot of variables from the high- and low-pressure zones of overlapping wings. There have been more than a few midair collisions with one airplane quite literally sucked into another. That was what I was thinking about when I was a passenger in the copilot’s seat watching the guy in the left seat fly fingertip formation with a tanker. Here was one 200,000-lb. aircraft flying next to another weighing almost as much, so closely that our left wing was underneath and just behind the tanker’s right wing. I asked our squadron commander about this and was told it was perfectly safe and we did it to keep our flying skills sharp.

A few months later there was a midair between a tanker and an AWACS airplane and the Air Force made it clear in no uncertain terms that anyone caught flying unauthorized formations in any aircraft would be getting a one-way ticket to Leavenworth, the military’s most infamous prison. All of a sudden, the fingertip formation program in our squadron went away.

A few years later, I joined the Air Force’s only Boeing 747 squadron (at the time) and shortly after I arrived I was medically grounded with cancer. I spent two months in a hospital and shortly after I returned the squadron commander was fired. There were videotapes circulating showing him flying fingertip formation with another of our 747s. In this case, there were two 600,000-lb. airplanes doing what I had seen in the smaller 707. I overheard him talking about it, acknowledging that he was fired and forced to retire. I think he got off easy.

The most unkind, and valid, insult ever given to an airline came from Robert Gandt in his excellent book Skygods: The Fall of Pan Am, when he wrote, “Pan Am was littering the islands of the Pacific with the hulks of Boeing jetliners.” By the close of 1973, Pan American World Airways had lost 10 Boeing 707s, not including one lost in a hijacking. At least seven of the 10 crashes were due to pilot error. Pan Am initiated a study to find out what was wrong. As the study was being conducted, its pilots crashed two more airplanes.

To get a feel for the carrier’s culture at the time, Gandt tells the story of a captain flying a visual approach into Honolulu International Airport in the days they shunned checklists or callouts. The captain simply flew the airplane as he thought best while the first officer did his best not to offend the “skygod.” Descending through 600 ft., the first officer asked the captain if he was ready for the landing gear. The captain exploded with rage, saying, “I’ll tell you when I want the landing gear.” Two and a half seconds later, with a great deal of authority, he said, “Gear down!”

The story doesn’t end the way you would expect. The captain reported the first officer’s temerity to the chief pilot, whose response was to tell the first officer that if he ever challenged another captain’s authority, he would be fired.

It is true that was a different time, but the culture at Pan Am was firmly established in the flying boat era: The captain was imperial. That vaunted status became a true danger with the arrival of jet-powered airliners. In fact, things got so bad, the FAA threatened to ground the airline. Subsequently, the company got rid of all those “skygods” and turned into one of the safest airlines in the world. But until then, it provided case study after case study on how not to run a crew.

In both my Boeing 707 and 747 squadrons, members were considered to be performing what the Air Force called “special duty” assignments. We were outside the normal assignment process and getting hired required an interview with the squadron’s command staff, whose leadership felt above the normal rules of the Air Force. In both squadrons various Air Force rules were formally waived and both squadrons tended to bend those bent rules further. But in both cases, we were spared midair collisions because external forces managed to rein us back in. Pan American World Airways was not so lucky, but it managed to return to the fold after finally learning hard lessons.

Incompetent Leaders

Deficiencies in knowledge, skills or attitudes at any level in the organization, notes Wetherbee, can result in accidents. Qualified assessors should have been assigned to test knowledge and skills and evaluate attitudes of all people who were contributing to hazardous missions. One of my sister squadrons from my Boeing 707 days experienced this with tragic results.

The RC-135S was a Boeing 707 variant assigned a spying mission (the “R” stands for reconnaissance). In the late 1970s and early 1980s, an RC-135S could usually be found sitting in Shemya AFB, on Shemya Island, Alaska. The weather on this Aleutian Island was usually poor, but the location was key for monitoring a Soviet Union ballistic missile test area.

On March 15, 1981, an RC-135S landed short of the runway at Shemya, destroying the airplane and killing six of the 24 crew on board. The copilot had ducked under a precision approach radar (PAR) glidepath and the poor visibility at minimums fooled both pilots into thinking the landing could be salvaged.

As with most aircraft accidents, there are many related causes, but the striking fact in this tragedy is that the squadron appeared to have very good pilots who flew into this hazardous airport routinely with great success. This particular copilot, however, had a history of flying below glidepath and his behavior appeared to be overlooked. Compounding the problem was that the squadron’s other pilots also may have tended to fly below glidepath but were able to get away with it due to a higher experience level. The copilot survived and was asked about the prohibition against ducking under in the Air Force instrument flying manual, called Air Force Manual 51-37. He said he thought that manual only applied to the T-37, the airplane he flew in pilot training.

I had undergone Air Force pilot training at about the same time as this copilot and also flew the T-37. I knew full well that AFM 51-37 applied to all Air Force airplanes. It seems to me someone along the way should have realized that this pilot, and possibly others in the squadron, did not have the competence to correctly fly a PAR approach. I was flying an EC-135J when the accident report was released. The EC- and RC- are both derivatives of the C-135, a Boeing 707. Unlike the KC-135A, however, these airplanes were much heavier and had higher approach speeds. Flying a PAR was a challenge. Many in our squadron knew some of those who had died in the RC-135 and our nonpilots wondered if our pilots were competent enough to have prevented the crash. We pilots, however, had no doubts.

Reading the Signals

Of course, there are countless textbooks, web posts, magazine articles and seminars out there that tell you what not to do so you can avoid the next aircraft accident. The problem is that most operators in organizations that will have that next aircraft accident are blind to that advice and to the danger signs within their own department or group. They believe, as most of us do, that they are doing everything just right and that the next accident will happen to the “other guy.” What worries me, and should worry you, is that the other guy could be me or you. Wetherbee’s list of warning signals gives us something to look for:

(1) Does your organization place more importance on the desired results of your mission (getting from Point A to Point B) than on the activity required to do that (flying safely within all known procedures and regulations)?

(2) Does your organization spend time looking for weaknesses and other ways it may be vulnerable to missing something important?

(3) Does your organization earnestly and honestly use assurance programs, such as SMS and FOQA?

(4) Does your organization look the other way at violations of any rules, policies or procedures?

(5) Are your operators competent at what they do?

A Case Study in Progress

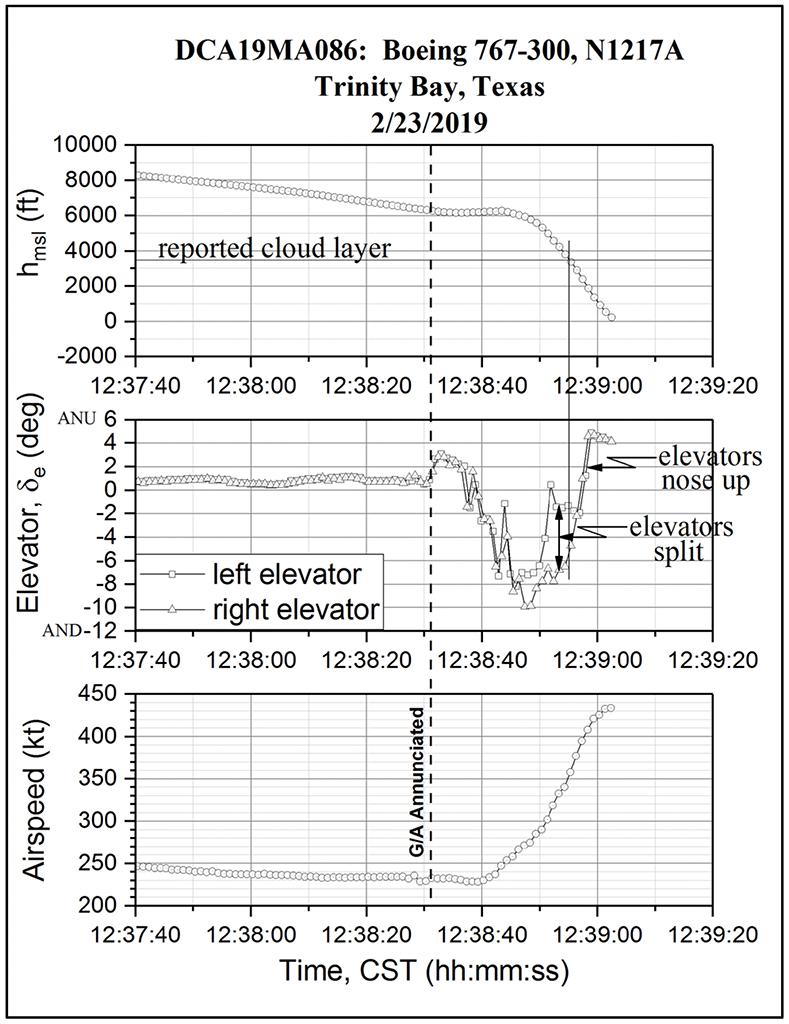

In February 2019, an Atlas Air Boeing 767 plunged into a muddy swamp near Houston-George Bush Intercontinental Airport (KIAH), doing over 400 kt. with the autothrust engaged. While the NTSB has not finished its investigation, it has released its airplane performance study.

It appears that during their descent the takeoff/go-around function of the autopilot was activated, confusing the first officer, who was the pilot flying. He made a comment about airspeed and about the airplane stalling, though all indications were otherwise. Looking at a plot of the airplane’s altitude versus airspeed and elevator position, it appears the first officer pushed the nose down aggressively while the captain pulled back. Once they popped out of the weather the first officer joined in pulling back the elevator but it was too late.

The first officer had a history of failed check rides at Atlas and previous employers. The captain’s record was only slightly better. According to the director of human resources at Atlas Air, the carrier had seen a “tough pilot market.” After looking at these two pilots and their training at Atlas, it’s my opinion that the operator’s hiring standards were low and it trained as best it could with the talent available. I think the culture emphasized filling cockpit seats over producing safe pilots, failed to look for weaknesses in its hiring and training processes, failed to implement or use an effective pilot evaluation system, and failed to ensure the competency of its pilots. In other words, the organization exhibited four of the five warning signals.

Other than landing at the wrong airports a few times and sliding off the end of a runway once, Atlas Air’s safety record was generally good. But no operator can rest on its laurels and consider safety something that is addressed only once.

Years ago, while flying for TAG Aviation, we had a pilot retire who gave us a well-intentioned compliment during his exit interview that hit my flight department two different ways. TAG had well over 200 pilots at the time and double that number of personnel. The retiring pilot had been with TAG almost from the beginning and had been a member of several flight departments. He said at his exit interview that we were the best flight department in which he had been a member in terms of adhering to standard operating procedures. He said that we were “as close to being by-the-book” as he had ever seen.

Half of our pilots were pleased with the statement, but the other half asked, “What do you mean ‘close?’” He was referring to our disregard for 14 CFR 91.211, which requires oxygen use above FL 350 when one pilot leaves the cockpit. Our chief pilot would not budge on the subject. He was fired about a year later and we immediately started flying by the book, even when it came to 14 CFR 91.211.

That was 18 years ago. I am now starting my 12th year leading my current flight department. I worry about whether our organization is placing more importance on the desired results of the mission than the activity required to do that. That is the nature of our business and if it doesn’t worry you, it should.
